For me, 2025 went by very fast, maybe because of the feeling that nothing really changed, or because things still feel the same and we need to rethink our actions and attitudes as human beings and as a society.

Anyway, the year went by and, like any good end-of-year tradition, I couldn’t help but bring back (once again) an article talking about what might show up in the news, in conversations, and in other places you might pass through.

Look: I don’t have a crystal ball, and you can check how my predictions from last year turned out, so you can criticize me and even ignore me before reading everything I’m going to point out here.

But, with that disclaimer out of the way, here’s my opinion on what we can expect, in terms of hype, for 2026.

1. Programming / Programming Languages

Here I’m going to do the opposite and play devil’s advocate relative to what I said last year. I had said that in 2025 we would possibly see a big wave of excitement around Go and Rust and, apparently, there was some increase, but nothing that really looked like that to me. Worse: in my comparison, Go and Rust simply dropped and couldn’t hold their positions in terms of hype.

Even so, there was growth in usage and in the search for professionals in both languages. Like I did last year, here’s a simple LinkedIn search using the languages as the query.

LinkedIn stats:

| Go in Brazil | Rust in Brazil |
|---|---|
| 5,000+ | 3,000+ |

Probably due to my bias toward Rust, I heard a lot more about its usage than about Go’s (at least I saw more Rust news throughout the year).

In any case, I don’t think we’ll see anything extraordinary in this area in 2026, no big buzz or hype around programming languages. Maybe we’ll get some good news around small improvements, or something that reaches our ears in already-established languages, but nothing that really jumps out.

Something quite common, and that we’ll probably see more and more, is established languages borrowing features and syntax from each other to make developers’ lives easier (this has been going on for years).

And look, that’s good: we don’t need, every single year, major novelties and drastic changes in a field that’s so vast and already well-established.

Something that might keep impacting programming a bit is the use of AI and agents, but I’ll discuss that in another topic.

Also, it doesn’t seem to me that we’ll see the emergence of any major framework that generates a lot of buzz, especially in already-established languages (they’ve all matured and moved past that phase).

My Take: It seems healthy to keep an eye on languages like Go and Rust, because they can play an important role over the next few years and are probably an efficient path for a career change.

1.1. New Paradigms?

I decided to include this part not as something to watch in 2026, but maybe in the long term (5 years?).

Either way, these could become language changes that influence our day-to-day a few years from now, because, as I mentioned, some languages gradually incorporate the "good" aspects that others bring.

1.1.2. Algebraic Effects

This change in how we code is something you’ve probably seen before. It’s not mainstream at all, and it’s still barely implemented.

Dan Abramov has an excellent blog post, Algebraic Effects for the Rest of Us, talking about Algebraic Effects back in 2019; so it’s not that recent (but also not old enough to be mainstream).

It’s interesting to note that the OCaml programming language, in version 5.3, added to its syntax the so-called effect handlers, which we can treat as a practical implementation of Algebraic Effects.
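To make the idea a bit more concrete, here’s a minimal sketch that emulates the core of algebraic effects with Python generators: the computation "performs" an effect by yielding a request, and a separate handler decides how to answer it and resumes the computation. This is only an approximation (Python has no native effect handlers, and generators can be resumed only once per request), and the names `Ask`, `greet` and `run_with` are hypothetical, made up for this illustration.

```python
from dataclasses import dataclass
from typing import Any, Callable, Generator


@dataclass
class Ask:
    """An effect request: 'I need a value for this key, whoever handles me.'"""
    key: str


def greet() -> Generator[Ask, Any, str]:
    # The computation performs effects by yielding them; it does not know
    # how (or by whom) they will be handled.
    name = yield Ask("name")
    lang = yield Ask("lang")
    return f"Hola, {name}!" if lang == "es" else f"Hello, {name}!"


def run_with(handler: Callable[[Ask], Any],
             computation: Generator[Ask, Any, str]) -> str:
    """Drive the computation, resuming it with whatever the handler returns."""
    try:
        effect = next(computation)  # run until the first effect is performed
        while True:
            # Ask the handler for an answer and resume the computation with it.
            effect = computation.send(handler(effect))
    except StopIteration as done:
        return done.value  # the computation's final result


# The same code gets a different meaning depending on the handler in charge.
english = {"name": "Ada", "lang": "en"}
spanish = {"name": "Ada", "lang": "es"}
print(run_with(lambda eff: english[eff.key], greet()))  # Hello, Ada!
print(run_with(lambda eff: spanish[eff.key], greet()))  # Hola, Ada!
```

The point of the design is that `greet` never imports a config reader, a database or a mock: swapping the handler is enough to change where the values come from.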

Could new languages incorporate this into their syntax in the future?

Ref.: Generalized Evidence Passing for Effect Handlers

My Take: This will probably happen at some point in a few languages; as I said, I don’t believe this is something for 2026, but maybe we’ll see some implementation of this kind within the next 5 years. Possible candidates: JavaScript / TypeScript, Elixir, Scala.

1.1.3. Linear Types

Another bet, much more long term than 2026, because, in this case, it’s a major paradigm shift in how data types and access to them are handled. Most likely, an already-established language wouldn’t change to meet the necessary requirements.

The idea of Linear Types comes in to "fix" the Rust Borrow Checker learning curve; therefore, we might see the rise of new languages with Rust-like power but with a simpler approach, making it easier for new developers to get in.

Let’s see whether the language Austral can prove itself valuable and whether the use of Linear Types will, in fact, be simpler in the long run; maybe even replacing Rust itself.

"Linear types: linear types allow resources to be handled in a provably-safe manner. Memory can be managed safely and without runtime overhead, avoiding double free(), use-after-free errors, and double fetch errors. Other resources like file or database handles can also be handled safely."

Ref.: CS 4110 – Programming Languages and Logics, Lecture #29: Linear Types

My Take: We’re really far from a change like this (even farther than in the Algebraic Effects case). This is more about you, dear reader, being aware that it exists and, if you enjoy studying "different things", exploring the topic.

1.2. Python 3.15

Last year I wrote about what we would observe in 3.14 and, especially, about Python Free-Threading. Well: it became reality and today we have versions 3.14 and 3.14t. Everything according to what was agreed between the Python core devs and the Council, and 3.15 will possibly be the last version where we have this split; next year, when writing about Python 3.16, we’ll talk only about Python without the GIL (I hope 🤞🏻).

1.2.1. JIT

Good news for 3.15 will be improvements to the JIT integrated into CPython using LLVM (Upgraded JIT compiler).

Something very welcome, to reduce criticism and issues related to the language’s performance.

1.2.2. Lazy Imports

One great item that might make it into 3.15 is PEP 810 – Explicit lazy imports. In particular, the way the PEP describes the feature makes me think this should be the default (but I’m not here to criticize past decisions).

"Lazy imports defer the loading and execution of a module until the first time the imported name is used, in contrast to ‘normal’ imports, which eagerly load and execute a module at the point of the import statement."

1.2.3. Wishlist

Here are some other PEPs I’d like to see implemented in Python, which could still land in 3.15 (maybe) or in future versions.

- PEP 798 – Unpacking in Comprehensions

- PEP 806 – Mixed sync/async context managers with precise async marking

- PEP 505 – None-aware operators

All of these PEPs are being discussed (or were discussed in the past) on Python Discuss.
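To show why two of these would be welcome, here’s a small sketch of the code we write today, with the proposed spellings only in comments (they are my reading of PEP 798 and PEP 505 and do not run on current Python). The data and the `display_name` function are hypothetical examples.

```python
from itertools import chain
from typing import Optional

rows = [[1, 2], [3], [4, 5]]

# PEP 798 proposes allowing `[*row for row in rows]`.
# Today we need a nested comprehension or itertools.chain:
flat = [x for row in rows for x in row]
assert flat == list(chain.from_iterable(rows))
print(flat)  # [1, 2, 3, 4, 5]


def display_name(user: Optional[dict]) -> str:
    # With PEP 505's None-aware operators this could be roughly:
    #     return user?.get("nick") ?? "anonymous"
    # Today every step needs an explicit None check:
    if user is None:
        return "anonymous"
    nick = user.get("nick")
    return nick if nick is not None else "anonymous"


print(display_name(None))             # anonymous
print(display_name({"nick": "ana"}))  # ana
```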

My Take: Python, like every other mainstream language used in enterprise, will keep evolving in the coming years and, in Python’s case, good surprises will keep showing up to make us admire this wonderful community even more.

1.3. Spec-Driven-Development (SDD)

Around mid-2025, we heard some mentions about the return of BDD and how it could be adjusted to work with LLMs; that is, you would define behaviors and an LLM would generate the code and, consequently, the tests.

It seems to me that this cooled off over the following months, and it will probably stay that way unless some new tool shows up or existing tools become better integrated into workflows. In Python, we could combine the libraries Behave, Hypothesis and MutMut: describe the behavior with Behave (we write the scenarios and an LLM generates the code) and then validate the generated code with Hypothesis (property-based testing) and MutMut (mutation testing), going far beyond "standard" TDD.
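Here’s a rough, standard-library-only sketch of that workflow, so it runs without installing anything. The Gherkin text, the `apply_discount` function (standing in for LLM-generated code) and the hand-rolled random check are all hypothetical; in a real project the scenario would live in a Behave feature file and the property check would be written with Hypothesis, which also shrinks failing inputs for you.

```python
import random

# The behavior we would hand to the LLM (plain Gherkin text, not parsed here).
SPEC = """
Feature: Shopping cart total
  Scenario: Applying a percentage discount
    Given a cart total of 200.00
    When a 10 percent discount is applied
    Then the total is 180.00
"""


def apply_discount(total: float, percent: float) -> float:
    """Stand-in for the code an LLM would generate from SPEC."""
    return round(total * (1 - percent / 100), 2)


def check_scenario() -> None:
    # The concrete example from the scenario: what a Behave step would assert.
    assert apply_discount(200.00, 10) == 180.00


def check_properties(trials: int = 1_000, seed: int = 0) -> None:
    # A poor man's property-based test: the discounted total must never be
    # negative and never exceed the original total, for many random inputs.
    rng = random.Random(seed)
    for _ in range(trials):
        total = round(rng.uniform(0, 10_000), 2)
        percent = rng.uniform(0, 100)
        discounted = apply_discount(total, percent)
        assert 0 <= discounted <= total, (total, percent, discounted)


check_scenario()
check_properties()
print("scenario and properties hold")
```

The scenario pins down one example, while the property check probes inputs nobody wrote down, which is exactly where generated code tends to surprise us.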

And in your favorite programming language, how could this be done? Which libraries would you use?

Ref.: A Comparative Study of LLMs for Gherkin Generation

However, a new concept has been mentioned and raised as a possibility: Spec-Driven-Development.

Because it’s new and still in an experimental phase, some definitions are loose and incomplete; for example, what exactly counts as a specification? But the main idea is to have a technical specification to hand to an LLM, so it can do the coding work based on the spec.

There are already projects like Kiro.dev, enabling the integrated creation of these specifications.

"With SDD, Kiro’s agent writes a full specification of your software before writing any code."

Understanding Spec-Driven-Development: Kiro, spec-kit, and Tessl

Episode 277: Moving Towards Spec-Driven Development

My Take: Both using BDD and this new SDD concept sound like interesting experiments; whether they’ll be effective or truly useful and efficient, only time will tell. But, if it’s possible and viable, it would indeed be something very interesting and, yes, revolutionary.

2. Escaping The Cloud

As far as I can tell, there’s a slight trend (something linear, maybe?) of migrating away from major cloud providers toward on-premise or smaller, more local providers.

In 2023, DHH (We have left the cloud) published a post about leaving the cloud and going back to on-premise servers. I wouldn’t say it was the first, but at the time it caused some commotion; throughout 2025 I saw other moves in that direction.

One thing that may accelerate this movement is the recent series of outages at AWS (How a tiny bug spiraled into a massive outage that took down the internet), Microsoft (Microsoft Azure Outage (Oct 29 2025): Root Cause, Impact and Technical Analysis) and Cloudflare (Cloudflare outage on November 18, 2025). These incidents left thousands of companies and millions of people without access to services and products.

In Brazil, Magazine Luiza created its own public cloud, the Magalu Cloud. Recently, the Globo conglomerate partnered to host its products on Magalu Cloud (Magalu Cloud e Globo Technologies firmam parceria para impulsionar ecossistema de nuvem nacional com alta performance).

My Take: These outages may accelerate larger exits from the major cloud providers (AWS, Google and Microsoft) and other services. Maybe this change makes more sense outside the US (and maybe Europe), in regions like Latin America, Asia and Oceania. Let’s see in 2026 whether this migration continues in a linear way or whether we’ll see a more significant exit due to outages, and whether we’ll have more interruptions this year.

3. Generative AI: Will The Bubble Burst?

Over the last 5 to 6 years, hundreds of billions of dollars have been poured into companies so they could build large language models (LLMs), which creates this feeling of a bubble.

We are definitely in a bubble. I can’t say how big it is, nor exactly when it will burst, but, as I mentioned at some point this year on Mastodon (or on BlueSky, I don’t remember), I believe we still have one or two more years of massive investments flowing in that direction before any bubble actually pops.

That doesn’t mean companies won’t fail or shut down products during this period (that always happens). But the idea of the bubble bursting would be many products, from many companies, having their funding pulled. A big capital withdrawal from the major players is something that, I believe, won’t happen in 2026.

I’ll point out what will still suck up money in the next year: ideas that still have traction and should take time either to become real or to be abandoned.

I won’t put them as a separate topic, but we’ll still see companies trying to inject AI into their products. We saw a lot of that in 2025, with some problems (as I’ll mention next), and this trend should continue in 2026; so expect more and more products with an embedded chatbot.

In a recent interview, Google DeepMind CEO Demis Hassabis commented on adding Gemini to many Google products as something positive (The future of intelligence | Demis Hassabis (Co-founder and CEO of DeepMind)).

3.1. Agents

In 2025, I commented that we would probably see a large amount of money and attention going to AI agents; at the time, I talked about this mostly because of Devin. However, it turned out that much of the attention didn’t go to Devin, but to other agent products, especially Claude Code.

I think we can say Anthropic won this AI Agents race, right? Claude Code became one of the main tools sold as state of the art for completing code. And not only that: this, for sure, increased Anthropic’s valuation to the point where the company acquired the Bun runtime (Anthropic acquires Bun as Claude Code reaches $1B milestone).

Most likely, in 2026, we’ll continue to see and be bombarded with AI Agents, and we’ll see success cases and failure cases. For sure, failures will be heavily amplified and exaggerated by everyone, especially by those who are against LLMs.

One example of failure that makes sense to me is that Microsoft recently pulled investments from the Copilot AI Suite. We definitely don’t need LLMs and agents everywhere in our lives. They have their value, but we don’t need them integrated into every piece of software and, much less, at the operating system level, as Microsoft was probably (and inevitably) trying to push onto people.

Agent technology has already proven itself; what still needs to be proven as truly effective is whether LLMs will have enough context and "intelligence" to be 100% effective (there’s no margin for error for a robot).

My Take: We’ll still see lots of news about AI Agents, and not everything has been explored with these tools yet. Possibly, 2025 was a testing phase and now, in 2026, we’ll start seeing more integrations with agents that are already well-established in the market.

3.2. Video Generation

We had many "advances" in this area of Generative AI, in the sense of models made available for end users to generate their own videos. Videos that are basically ultra-realistic and, often, impossible to distinguish from real life.

And, with that, came a gigantic wave of AI slop!

"AI slop (sometimes shortened to just slop) is digital content made with generative artificial intelligence, specifically when perceived to show a lack of effort, quality or deeper meaning, and an overwhelming volume of production."

Today, you can open YouTube Shorts and be flooded with AI-generated videos. One of the main models that fueled this wave of slop was Veo 3. And, not to fall behind, OpenAI launched Sora 2, with an app for generating and sharing your own videos.

My Take: Even though it was a bit dragged out, I believe we’re entering a new moment in content generation on the Internet. People were worried about LLMs, but now we have models that can generate text, audio and video with extremely realistic quality, which can bring huge implications for the sharing of false information!

3.3. Environmental Problems

As I mentioned last year, this was a topic that stayed very present in 2025. However, there was no practical progress (or only small progress, with BitNet b1.58 and LMCache, among others) to mitigate or reduce the carbon footprint of massive LLM usage. It stayed on the agenda in the sense that there were many discussions and concerns about the topic, but, as I said, nothing practical and effective.

Also, at the beginning of the year there was a lot of commotion, especially after the announcement of building new datacenters and a Brazilian government initiative to bring datacenters to Brazil (Entenda o plano de R$ 2 trilhões de Haddad para atrair data centers para o Brasil).

At least this year, some companies started to disclose some information about how much energy LLMs consume (on average, I believe). But the data was about model inference, that is, how much energy an average query uses for the model to answer a question.

An interesting comparison Simon Willison made on his blog, assuming the energy cost is really that, shows how much Netflix time would be equivalent to a ChatGPT query.

https://simonwillison.net/2025/Nov/29/chatgpt-netflix/

"Assuming that higher end, a ChatGPT prompt by Sam Altman's estimate uses:

0.34 Wh / (240 Wh / 3600 seconds) = 5.1 seconds of Netflix

Or double that, 10.2 seconds, if you take the lower end of the Netflix estimate instead."
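The arithmetic in the quote is easy to reproduce. Here’s a small sketch using only the numbers quoted above; the 120 Wh per hour lower-end figure is my inference from "double that" (half the power gives twice the seconds), and the function name is hypothetical.

```python
CHATGPT_WH_PER_PROMPT = 0.34      # Wh per prompt, the estimate attributed to Sam Altman
NETFLIX_WH_PER_HOUR_HIGH = 240.0  # higher-end Netflix estimate, from the quote
NETFLIX_WH_PER_HOUR_LOW = 120.0   # assumption: inferred from "double that"


def netflix_seconds(prompt_wh: float, netflix_wh_per_hour: float) -> float:
    """How many seconds of streaming use the same energy as one prompt."""
    wh_per_second = netflix_wh_per_hour / 3600  # one hour has 3600 seconds
    return prompt_wh / wh_per_second


print(round(netflix_seconds(CHATGPT_WH_PER_PROMPT, NETFLIX_WH_PER_HOUR_HIGH), 1))  # 5.1
print(round(netflix_seconds(CHATGPT_WH_PER_PROMPT, NETFLIX_WH_PER_HOUR_LOW), 1))   # 10.2
```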

However, it’s well known that inference-only values don’t tell the whole story, right?

In May 2025, MIT Technology Review published this article talking a bit about the energy consumption of these models:

We did the math on AI’s energy footprint. Here’s the story you haven’t heard.

"Some energy is wasted at nearly every exchange through imperfect insulation materials and long cables in between racks of servers, and many buildings use millions of gallons of water (often fresh, potable water) per day in their cooling operations."

And that’s where we get to the wildest point of this whole adventure after money, AI and power.

3.3.1. AI In Space

There is no solution for the uncontrolled energy consumption driven by companies that claim they want what’s good for humanity and a better future for it. Apparently, thinking about consumption and proposing energy alternatives on Earth would take many years and would likely face several kinds of restrictions (and with good reason) raised by environmentalists.

So the solution that, in my view, is truly insane and will consume even more of Earth’s energy resources would be creating datacenters in SPACE.

"In 10 years, nearly all new data centers will be being built in outer space."

I’ll leave references here and you, dear reader, after consuming them, decide how crazy this is.

- Meet Project Suncatcher, a research moonshot to scale machine learning compute in space.

- Exploring a space-based, scalable AI infrastructure system design

- Towards a future space-based, highly scalable AI infrastructure system design

- How Starcloud Is Bringing Data Centers to Outer Space

- Powerful NVIDIA chip launching to orbit next month to pave way for space-based data centers

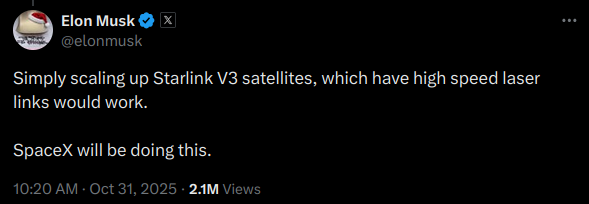

Bezos and Musk Race to Bring Data Centers to Space

"Elon Musk and Jeff Bezos have raced for years to build rockets and launch satellites. Now they’re racing to take the trillion-dollar data-center boom into orbit."

Madness or future? You decide...

My Take: We’re doomed. Even if we’re in a bubble and it eventually bursts, and many companies fail and products stop existing, a lot of money will be burned on initiatives that will likely bring little effective gain in energy terms. Taking datacenters to space doesn’t mean an energy gain, because it still takes a lot of energy to move any matter off Earth and into orbit. And, even if that were "cheap", there’s still the need for a whole infrastructure to transport data from space to Earth at speeds minimally compatible with what we have today with fiber.

3.4. The AI Cold War

Even though I don’t believe we’ll have a real war, we’re heading toward a "cultural" war about the use of AI.

Maybe you thought: "Oh, you’re talking about the war between the US and China, because of DeepSeek’s release at the beginning of 2025." No, that’s not what I mean: I’m referring to the war between supporters of AI (here I use the term broadly, not only for LLMs) and those opposed to the technology and the idea of AI-generated content.

Every day we see more discussions where people take extremely radical positions on both sides. These positions usually aim to debate, increase knowledge and prevent uncontrolled dissemination from becoming common, something valid and with which I agree.

Posts like Anthony Moser’s (I Am An AI Hater) have become common, and we see more and more content like that.

Meanwhile, on LinkedIn we see more and more posts of people praising AIs (or rather, LLMs) as saviors of the world.

In the middle of this is, I believe, the broad mass of people who may come across this content and reflect, or who may just keep living their lives and be bombarded by content where you can’t even tell whether it was generated by AI or not. A clear example is social media itself, where most people don’t care about (or rather, are unaware of) the existing problems and traps.

My Take: I believe this split will intensify more and more throughout 2026. We won’t have a physical war, but we will likely see more and more people against any kind of LLM usage (even when it’s correct and sensible). This polarization will probably follow the same patterns we see today between left and right in politics, or between environmentalists and deniers in the global warming debate.

3.5. Evolution And New Architectures

With the success and the limitations of LLMs, new architectures and visions emerged about how and where the Artificial Intelligence field should go. Some of them, in my view, are worth watching so you, dear reader, won’t be surprised if, "out of nowhere", a revolutionary model shows up that "will change society as a whole". Yes, I’m talking about the launch of ChatGPT: it wasn’t a surprise to those who had been following BERT and the Transformer architecture since around 2017, when Google published the paper on the topic.

Here are references for these architectures and ideas that will certainly permeate the collective imagination in 2026. We may indeed see models in use and products adopting them.

3.5.1. World Models

Big tech companies (like Google, NVIDIA and others) have been betting on this idea, and we’ll probably see a lot more news about this kind of architecture.

The main proposal is to have larger models that work with images and generate a concept of the world, instead of only statistically predicting the probability of new tokens.

A large part of the world-models idea also meets the "need" to create humanoid robots that effectively understand the world.

"World models are neural networks that understand the dynamics of the real world, including physics and spatial properties."

What Is a World Model?

Genie 3: A new frontier for world models

3.5.2. SSMs (Mamba)

Mamba is not a recent architecture (its paper dates back to 2023: Mamba: Linear-Time Sequence Modeling with Selective State Spaces), but the idea of replacing Transformers with SSMs still needs to prove itself in practice.
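To give an idea of what "linear-time" means here, this is a minimal sketch of the plain (time-invariant, scalar) state space recurrence that this family of models builds on: a hidden state is updated once per input, so the cost grows linearly with the sequence length. It is not Mamba itself: as the paper’s title suggests, Mamba makes the parameters depend on the input (the "selective" part), which this sketch leaves out. The function name and the parameter values are hypothetical.

```python
from typing import List


def ssm_scan(xs: List[float], a: float, b: float, c: float,
             h0: float = 0.0) -> List[float]:
    """Run a scalar linear state space model over a sequence.

    h_t = a * h_{t-1} + b * x_t   (state update)
    y_t = c * h_t                 (readout)
    """
    h = h0
    ys = []
    for x in xs:  # one constant-cost step per element: O(len(xs)) overall
        h = a * h + b * x
        ys.append(c * h)
    return ys


# An impulse at the first step decays through the state over time.
print(ssm_scan([1.0, 0.0, 0.0, 0.0], a=0.5, b=1.0, c=2.0))  # [2.0, 1.0, 0.5, 0.25]
```

Compare this with attention, where every new token looks back at all previous ones: here the whole past is compressed into `h`, which is both the appeal and the open question of the approach.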

An Introduction to the Mamba LLM Architecture: A New Paradigm in Machine Learning

What is a state space model?

3.5.3. JEPA

Basically, this is an initiative by one of the so-called "godfathers of AI" inside Meta. Yann LeCun, surprised by the capabilities of LLMs, went searching for new architectures to reach AGI.

His proposal is the Joint Embedding Predictive Architecture (JEPA).

I-JEPA: The first AI model based on Yann LeCun’s vision for more human-like AI

While I was writing this post, I looked further and saw that they recently released a new paper: VL-JEPA: Joint Embedding Predictive Architecture for Vision-language.

So academic output around JEPA has been constant, and Yann LeCun has been trying to push this new architecture as a possible evolution toward better models that are more consistent with the complex reality we live in.

My Take: Whether or not these architectures take off and become the new stars of the AI hype we’re living through, we still have a few years (probably 2 to 3) before we see meaningful improvements and products (chatbots) using them. For sure, many researchers and the industry have been looking for alternatives to LLMs, mainly due to the high training and inference costs, which are major disadvantages.

4. Quantum Computing

As I mentioned last year, we’ll keep seeing questions and speculation related to quantum computing, especially related to its use in AI as a possible solution to several problems (like energy consumption) that we still face today.

In my view, even though 2025 brought advances in this area (Top quantum breakthroughs of 2025), we’re still far from seeing quantum computers that are truly relevant and effective.

That doesn’t mean we won’t keep hearing news about quantum computing; some already use the term "quantum advantage" (The dawn of quantum advantage).

"[...] quantum advantage as the ability to execute a task on a quantum computer in a way that satisfies two essential criteria. First, the correctness of the quantum computer's output can be rigorously validated. Second, it is performed with a quantum separation that demonstrates superior efficiency, cost-effectiveness, or accuracy over what is attainable with classical computation alone."

My Take: My comment from last year still stands! Don’t be fooled by the "doomsday prophets"; we’re still far from any quantum computing advance that will affect us in day-to-day life.

5. Neuromorphic Computing

Here we enter the speculation zone at a "tinfoil hat" level: something we probably won’t see in 2026, but that we might hear about through some sparse, slightly crazy or strange news.

"[...] professional services firm PwC notes that neuromorphic computing is an essential technology for organizations to explore since it’s progressing quickly but not yet mature enough to go mainstream."

I don’t know about you, dear reader, but I find it extremely interesting to look for and discover these small drops of insanity that the IT field has been creating over the decades: processes and technologies that show up, are tested, go nowhere and then, years later, come back due to some study or more practical application.

It seems this is the case for neuromorphic computing. Its creation goes back to the 1980s, after which it stayed dormant for about 20 years, until new effective studies on the topic surfaced.

"Carver Mead proposed one of the first applications for neuromorphic engineering in the late 1980s."

Apparently, given the success of neural networks, the search for AGI (and now for ASI, Artificial Superintelligence) and the limitations many people see in LLMs, other algorithms and architectures are being researched (as mentioned earlier: SSMs, world models and JEPA).

I won’t go into details about what neuromorphic computing is; the IBM article below covers it well and it’s not my goal to explain it here. I’m just bringing information about what we might see or hear in 2026.

What is neuromorphic computing?

My Take: Just like quantum computing, we may see or hear small, sparse fragments about this. For example, a paper describing a model with performance equivalent to a small LLM. It will probably be something isolated, but if it demonstrates efficiency and effectiveness, it can, in the future, become a relevant direction in AI.

6. Final Thoughts

I’ll say it again: these are just ideas and possible technologies we might see during 2026. Some might reach the mainstream, others will remain in their niches and a few scattered news items will float around.

Personally, I like this exercise because it tests my ability to try to predict where the IT field is going. It’s up to you, dear reader, to apply critical thinking and evaluate whether everything presented here makes sense and whether, during 2026, we’ll see news, industry movement, or people moving in the directions described.

Finally, may 2026 be more rigorous and less permissive than 2025.

A big hug 🫂 and happy 2026 🌟🎉 !